Virtualization is an essential part of today's IT landscape. From virtual machines that run on workstations to virtual servers hosted in your organization's network operations center to cloud computing solutions, virtualization drives a vast amount of today's technical solutions.

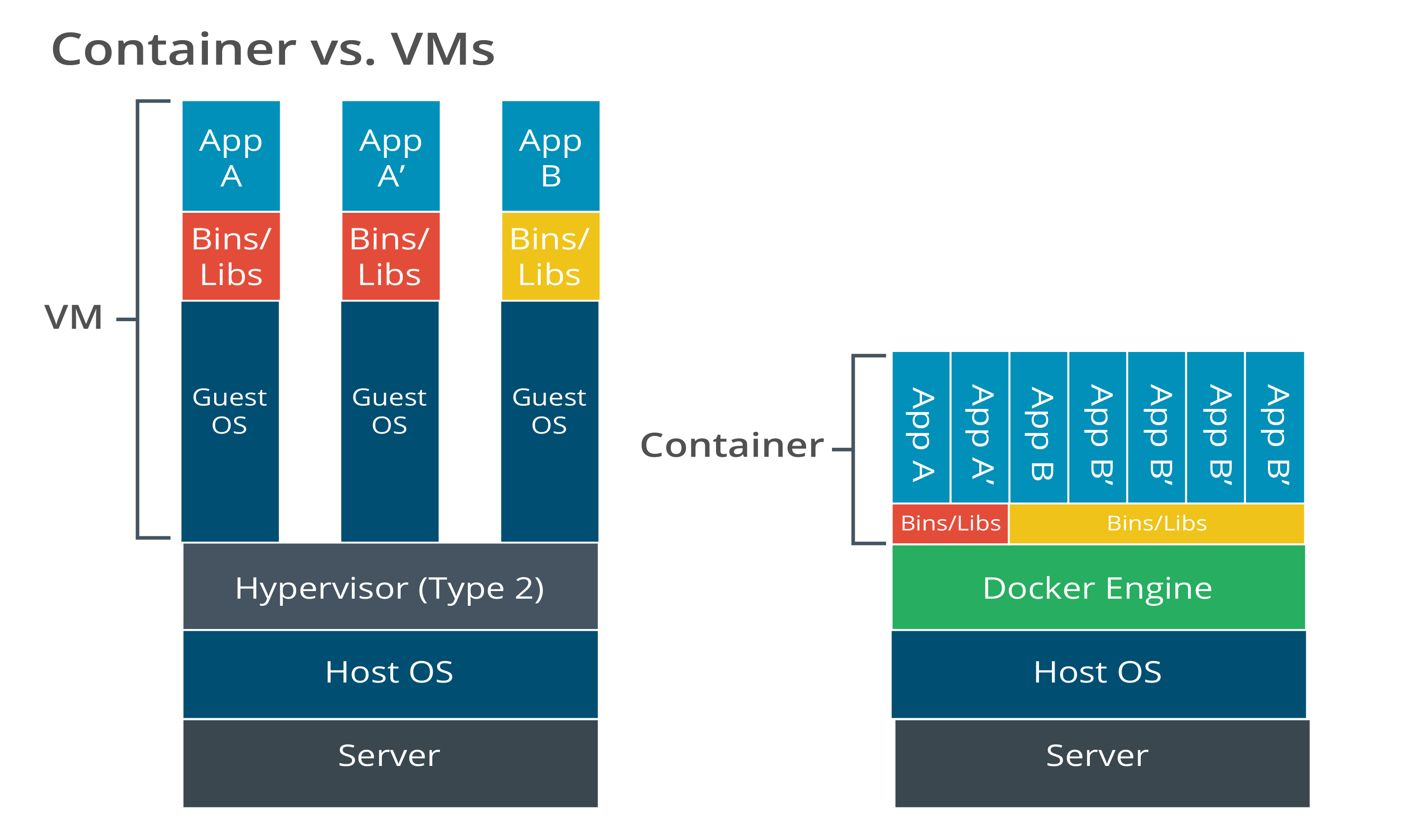

One approach is virtualizing at the hardware layer using software that partitions physical hardware among one or more virtual machines (VMs). Operating systems and applications are installed on these VMs, which then function like regular computers. Another approach is to virtualize one layer up, at the operating system level. Containerization provides much smaller, more robust and portable solutions that include applications running in isolated containers.

Both virtualization solutions are essential, but they solve different problems and are used in different settings. This article explains containers and the software they run on, along with offering use cases and a do-it-yourself example of deploying a simple container.

What Are Containers?

Containers are small, prepackaged software units that run atop a host operating system, such as Linux, Windows or macOS. Containers include all dependencies and libraries needed to run their constituent code. The benefits are quick deployment, scalability and the ability to make rapid changes.

Users can run containers on desktop systems, servers in an on-premises data center or cloud servers. Today, many organizations rely on on-premises or cloud containerization for some or all of their services.

The benefits of containerization include:

- Separating responsibility between developers and administrators for applications

- Providing portability, allowing applications to run nearly anywhere, including developer workstations, user computers, servers, cloud infrastructure, inside virtual machines, etc.

- Providing application isolation because containers are discrete components focused on a single application

- Enabling more efficient operations, including quickly deploying and modifying container-based applications

- Allowing agile DevOps-based development practices that avoid dependency and version issues by housing everything needed in the container image

When to Use Containers

Developers and administrators have many deployment choices, including running applications from desktop systems, on-premises bare metal or virtual servers, the cloud and containers. The first decision is to select one of these platforms.

One variable to consider is the type of application you're managing. Older monolithic applications may be better off on more traditional bare metal or virtual servers. However, if your development team is building a new application, then starting fresh with containers might be the best choice.

Another consideration is the number of moving parts in the application. Containers often provide microservices—small bits of code easily changed and independent of other components. If this sounds like your application, then containers may be for you.

Containers run on host systems, which may be virtual or physical. A host system is called a node. Multiple containers (a multicontainer deployment) can run on a single node, sharing its available resources. Use cases include developer testing, internal implementations or applications that process cached data. One concern with single-node multicontainer deployments is the lack of fault tolerance: if the node fails, every container on it goes down.

Multicontainer solutions are often defined using Compose, a tool for designing and managing multicontainer applications using a YAML file. YAML files are commonly used to define container configuration. The name is a recursive acronym for "YAML Ain't Markup Language" (originally "Yet Another Markup Language"). YAML has a strict syntax in which indentation defines the structure.
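As a rough sketch of what such a Compose file can look like, the shell snippet below writes a two-service compose.yaml into a scratch directory. The service names web and cache, the host port 8080 and the choice of the nginx and redis images are illustrative assumptions, not part of any deployment described in this article.

```shell
# Create a scratch directory so no existing files are overwritten.
workdir="$(mktemp -d)"

# Write a minimal Compose file. Indentation is significant in YAML.
cat > "$workdir/compose.yaml" <<'EOF'
services:
  web:                  # hypothetical service name
    image: nginx:alpine
    ports:
      - "8080:80"       # host port 8080 maps to container port 80
    depends_on:
      - cache           # start the cache container first
  cache:                # hypothetical service name
    image: redis:alpine
EOF

echo "Compose file written to $workdir/compose.yaml"
```

On a host where Docker and its Compose plugin are installed, running docker compose up -d from that directory should start both containers on the single node, and docker compose down should stop and remove them.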

Most container deployments occur on multi-node server clusters. These clusters provide far more scalability and fault tolerance than a single-node solution.

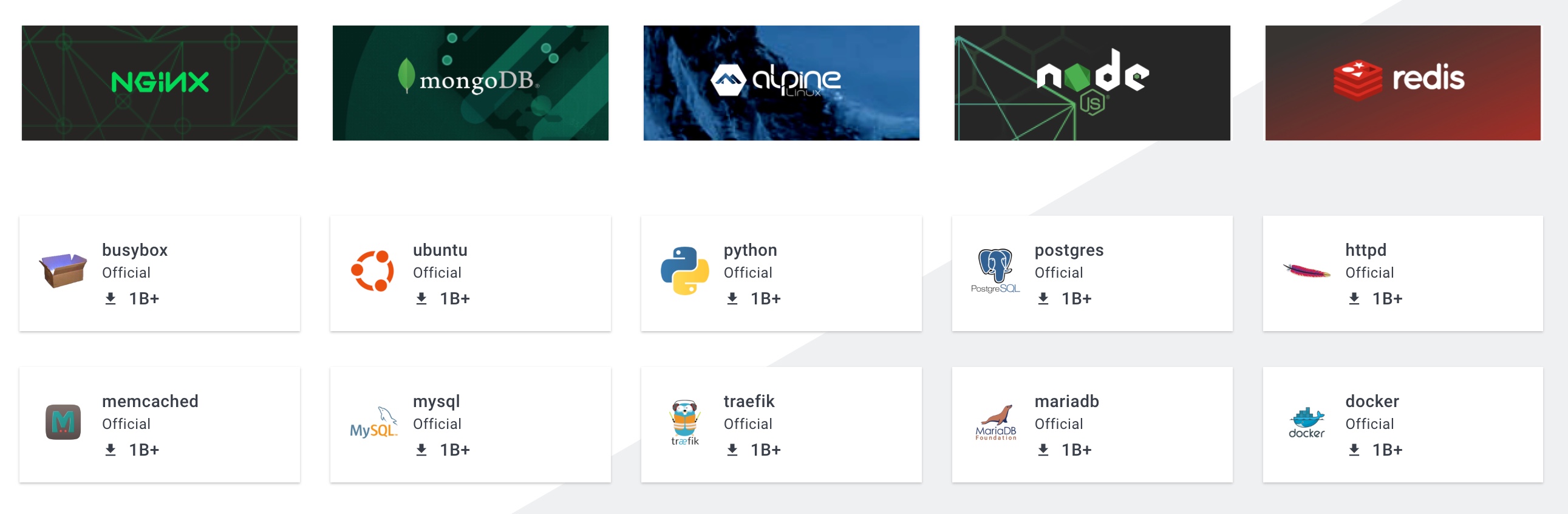

Containers typically deploy applications developed in-house. However, there are many use cases for containerized solutions using existing software. For example, Docker has sample application containers that include Python, Java, Nginx, MariaDB, WordPress and even a Minecraft server. Another intriguing solution is running the Tor browser in a container for another layer of anonymity.

Container Engines

Container engines are the software that manages one or more containers on a host system. The engines use the Open Container Initiative (OCI) image standard, making new images more consistent and predictable.

Container engines do the following:

- Accept user commands and input

- Pull container images from a registry

- Provision container mount points

- Manage the container runtime (start, stop, etc.)

Common container engines:

- Docker: The dominant container engine. It runs on Windows, Linux and macOS, comes in free and paid versions, and includes both CLI and Desktop versions.

- Podman: A popular open source alternative to Docker with a daemonless architecture and the option to run rootless containers. It runs on Windows, Linux and macOS. Its commands are similar to Docker's, making it easy to migrate.

- runC: An open source, low-level runtime for running containers from the CLI. Higher-level engines such as Docker use it under the hood.

- Windows Containers and Hyper-V Containers: Two separate solutions for running containers on Windows systems. Hyper-V Containers provide a VM host for containers, further isolating them for security and portability.

Container engines expose and share CPU, memory, storage and network resources across containers.

You will need to select a container engine as you begin exploring the wide world of containerization. I suggest starting with Docker or Podman, since both are common and well-documented.

Container Registries

Container images are stored in registries. Registries may exist within a private, isolated local area network (LAN), in a cloud environment or through public repositories such as Docker Hub. They store and centralize images for easier version control, sharing and availability. Developers may download (pull) or upload (push) images. Common registries include Azure Container Registry (ACR), Amazon Elastic Container Registry (ECR), GitLab and Quay, among others.

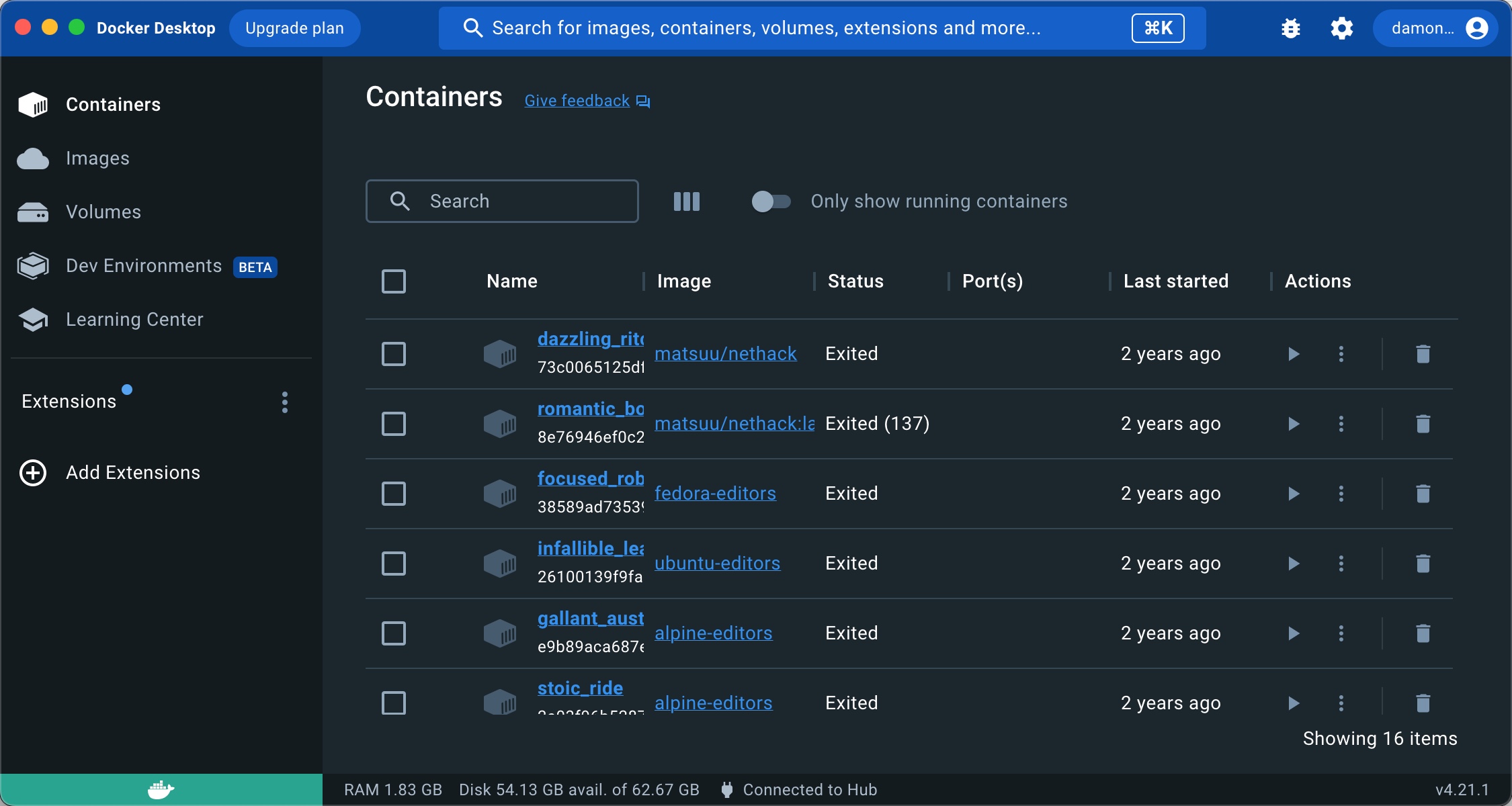

Manage Containers From Your Desktop

A great way to get started with container management is to use Docker Desktop. This graphical application makes managing containers easier than running command-line commands. If you'd rather work with Podman, Podman Desktop is the equivalent graphical application.

Container engines include various command-line utilities to manage containers. These tools enable the administration of containerized workloads, installation, runtimes, configuration options, networking and engines.

How Do Containers Work?

The container engine mediates between the container and the host operating system kernel, providing the connectivity and services the container needs. You will need some components before running containers.

Understand Image Files, Images and Containers

You need some basic vocabulary before beginning container operations. Three key terms or container concepts are image files, images and containers:

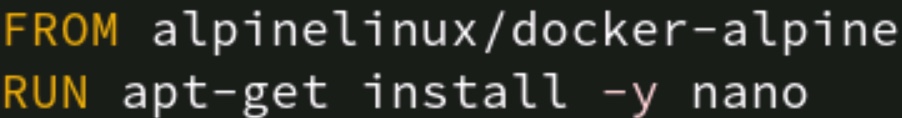

- Image files: Text files containing the commands and configurations necessary to build an image. These may be called Dockerfiles or Containerfiles

- Image: A template for creating a container that determines how the container will function when running

- Container: A running instance of an image

Containers must run from an image, and images are built from image files.

For example, the docker build command constructs container images from image files.
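A minimal sketch of that relationship, assuming Docker is installed: the snippet writes a short Containerfile and wraps the build and run commands in a function. The image tag curl-demo:0.1 and the function name build_and_run are made up for illustration.

```shell
# Scratch directory to act as the build context.
workdir="$(mktemp -d)"

# The image file: plain-text instructions for building an image.
cat > "$workdir/Containerfile" <<'EOF'
# Start from the small Alpine Linux base image.
FROM alpine:latest
# Add the curl package to the image at build time.
RUN apk add --no-cache curl
# Default command for containers started from this image.
CMD ["curl", "--version"]
EOF

# Build an image from the image file, then start a container from the image.
build_and_run() {
  # -f names the image file because Docker looks for "Dockerfile" by default.
  sudo docker build -t curl-demo:0.1 -f "$workdir/Containerfile" "$workdir"
  # --rm deletes the container once its command finishes.
  sudo docker run --rm curl-demo:0.1
}
```

Calling build_and_run should build the image and then print curl's version information from inside a short-lived container.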

Container Lifecycle

The following steps describe the lifecycle of a running container, showing the basic phases and services involved:

- The user initiates the container

- The container image is stored locally or pulled from a registry

- The container image is extracted and container layers are overlaid to create a complete filesystem

- The container mount point is specified

- Container settings from the image are set to customize and configure the container

- The container is given a namespace, which is passed to the host OS kernel for resource access, isolation and limitation

- The host OS kernel starts the container

- Additional security, such as SELinux, is configured

- The container executes its code to accomplish its given task

- The user shuts down the container, reversing the resource allocations listed above
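Several of these phases can be triggered one at a time from the Docker CLI. The function below is only a sketch that assumes a working Docker installation; the function name container_lifecycle and the container name lifecycle_demo are arbitrary, and steps such as namespace setup and SELinux labeling happen inside the engine rather than as separate commands.

```shell
# Walk one container through its lifecycle with explicit commands.
container_lifecycle() {
  name="lifecycle_demo"   # arbitrary example name

  # Download the image from a registry (layers already present locally are reused).
  sudo docker pull alpine

  # Prepare the container (filesystem layers, settings) without starting it.
  sudo docker create --name "$name" alpine sleep 60

  # Start the container; the kernel now runs "sleep 60" in isolation.
  sudo docker start "$name"

  # Report the current state, for example "running".
  sudo docker inspect --format '{{.State.Status}}' "$name"

  # Stop the container, then remove it to release its resources.
  sudo docker stop "$name"
  sudo docker rm "$name"
}
```

The docker run command used later in this article combines the pull, create and start steps into a single command.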

Run a Container

You can start with container operations pretty easily. The following steps run the test hello-world image before downloading a small general-purpose image, Alpine Linux. Another common image to get started with is BusyBox, which packages many standard Unix utilities into a single tiny executable.

The following steps install Docker on a Red Hat-derived Linux system. If you use a Debian-based Linux box, such as Ubuntu, use the apt package manager instead. Follow the appropriate installation instructions for macOS or Windows. If you have an extra Raspberry Pi, that's also an option.

Depending on your Linux system's configuration, you may need to use sudo for these commands.

1. Install the Docker container engine on a Fedora Linux system using these commands:

$ sudo dnf -y install dnf-plugins-core

$ sudo dnf config-manager --add-repo https://download.docker.com/linux/fedora/docker-ce.repo

$ sudo dnf install docker-ce docker-ce-cli containerd.io

These commands install the basic Docker components, including the containerd runtime environment, which handles the entire container lifecycle. Additional plugins and support software are available online. Another common runtime is CRI-O (Container Runtime Interface using Open Container Initiative runtimes).

2. Start and enable Docker:

$ sudo systemctl start docker

$ sudo systemctl enable docker

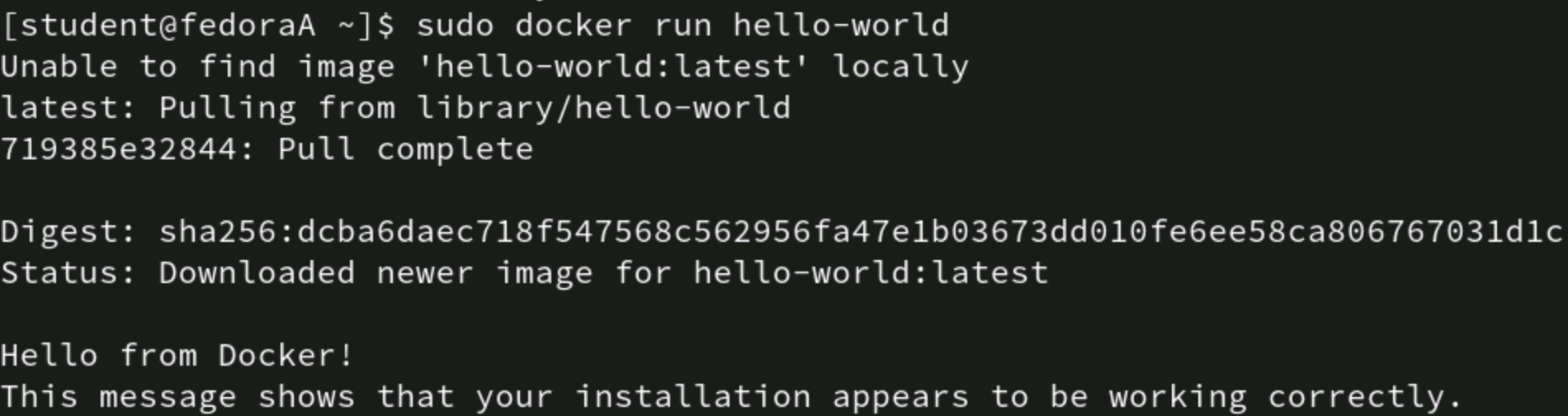

3. Run the traditional test container image:

$ sudo docker run hello-world

This image proves Docker is installed and can run containers. You can begin to use functional containers at this point.

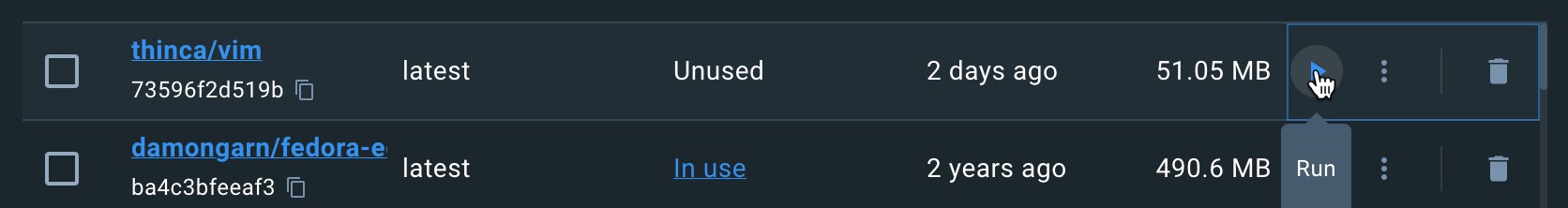

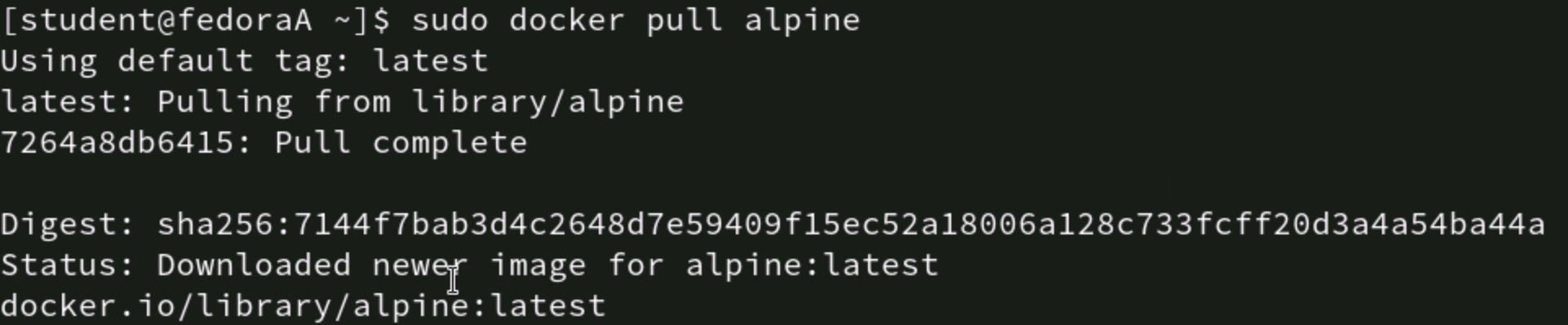

4. Pull the Alpine Linux image:

$ sudo docker pull alpine

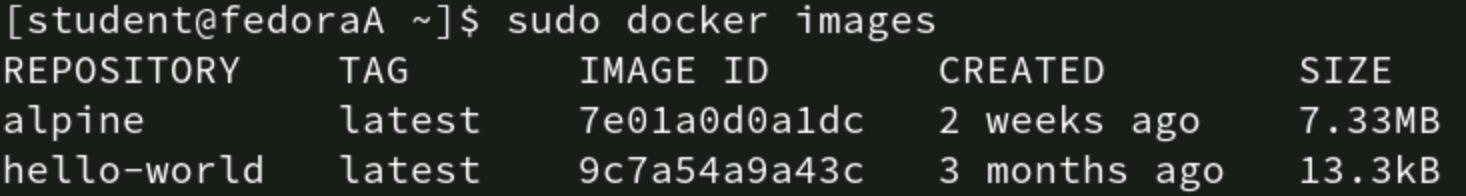

5. List images using the docker images command to confirm the pull succeeded. In this case, you should see the alpine and hello-world images:

$ sudo docker images

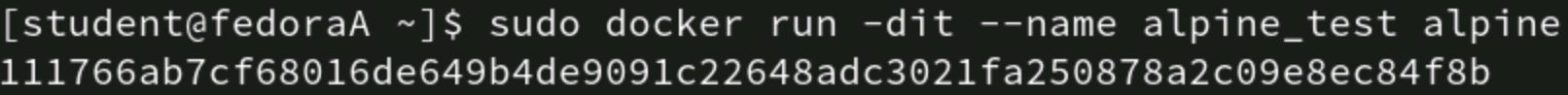

6. Run the alpine container from the image:

$ sudo docker run -dit --name alpine_test alpine

The options -dit add functionality to the container. The d option runs the container in the background, while the it options provide an interactive interface through a pseudo-TTY connection. The --name option allows you to provide a logical name to the container instance. If you do not specify a name, the container engine generates a random one.

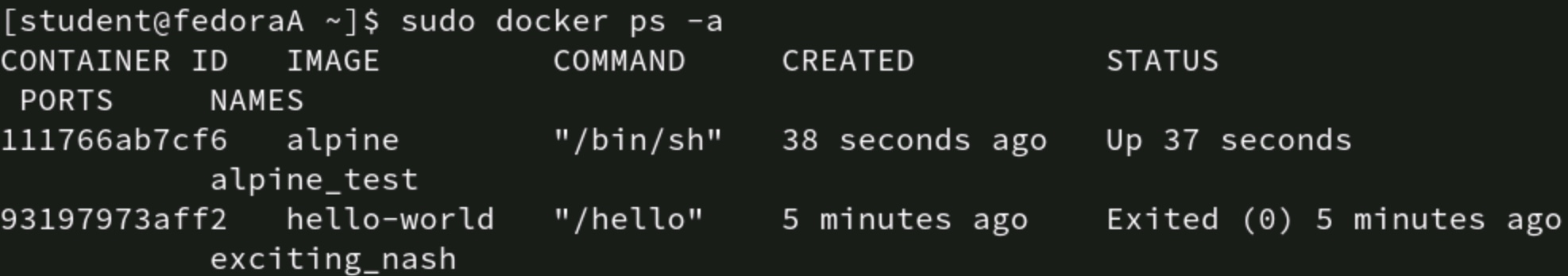

7. Use the following command to see any container instances you have run:

$ sudo docker ps -a

8. Use the stop subcommand to halt the Alpine container:

$ sudo docker stop alpine_test

Use the sudo docker ps -a command again to check the status of alpine_test. It should show Exited.
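A stopped container is not deleted; it keeps its name and settings until it is removed. The sketch below assumes the alpine_test container from the steps above still exists, and the function name inspect_and_clean_up is hypothetical.

```shell
inspect_and_clean_up() {
  # Restart the stopped container; it keeps its name and settings.
  sudo docker start alpine_test

  # Run a single command inside the running container.
  sudo docker exec alpine_test cat /etc/os-release

  # Stop the container and delete it. The alpine image itself remains.
  sudo docker stop alpine_test
  sudo docker rm alpine_test
}
```

For an interactive shell instead of a single command, sudo docker exec -it alpine_test sh opens one inside the running container.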

Congratulations! You've just entered the world of containerization by pulling images and by running and stopping containers. Your next step is finding web servers or other containerized applications to deploy.

The alpine and hello-world images are prebuilt and pulled from a registry. You can customize your own Containerfiles to create exactly the image you need for your application.

Other Container Operations Tasks

The above examples pulled container images from an existing repository. A push action uploads an image to a repository to share it with other users or on other machines. Before pushing an image up, you may need to build it. Building an image assembles the image from the instructions found in the image file. Remove images from the system with the rmi subcommand.
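These tasks might be chained together as follows. This is a sketch under stated assumptions: registry.example.com and the team/my-app repository are placeholders for a registry you can write to, my-app:1.0 is a hypothetical image name, the function name share_image is made up, and a Dockerfile is assumed to exist in the current directory.

```shell
# Placeholder values; substitute a registry and repository you can write to.
registry="registry.example.com"
remote_image="$registry/team/my-app:1.0"

share_image() {
  # Build the image from the Dockerfile in the current directory.
  sudo docker build -t my-app:1.0 .

  # Authenticate, then give the image a name that includes the registry host.
  sudo docker login "$registry"
  sudo docker tag my-app:1.0 "$remote_image"

  # Upload the image so other users and machines can pull it.
  sudo docker push "$remote_image"

  # Remove the local copies once they are no longer needed.
  sudo docker rmi "$remote_image" my-app:1.0
}
```

The registry host is part of the image name, which is how the engine knows where a push or pull should go.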

Containers vs. Traditional Virtual Machines

When choosing virtualization options, it's rarely VMs versus containers. Each has an essential role. Consider the following points when selecting containers or virtual machines.

When to Use Virtual Machines

Use virtual machines when addressing infrastructure. VMs virtualize at the hardware layer and include the operating system in the image. Choose them when you:

- Need the OS itself, perhaps to run an older application or to check application compatibility with a specific OS version

- Provide functionality normally deployed as part of an operating system, such as name resolution, DHCP or directory services

- Run legacy monolithic applications that cannot be easily containerized

When to Use Containers

Use containers to solve application problems. Containers virtualize at the operating system layer and do not include an operating system kernel in the image; they share the host's kernel instead. Choose them when you:

- Need application portability and management without being tied to specific hardware or a particular operating system

- Need quick application deployment and scalability

- Operate in multi-cloud and hybrid-cloud environments

- Follow a DevOps approach to application development, especially for microservices

- Create new modular applications with future scalability and modification in mind

- Need less resource overhead on the host system than VMs

And don't forget that containers can run on virtualized systems.

When Not to Use Containers

Containers are a great innovation, and they play a critical role in today's IT landscape, but they aren't appropriate for every situation. Do not use containers in these scenarios:

- When you need a graphical user interface: It is possible to run a GUI in a container, but it's challenging and not typically useful

- When you have security concerns and a requirement for application isolation: Containers share an operating system, opening a potential security hole

- When managing small-scale application deployments: A more traditional application installation is probably best unless you run containers regularly

Learn Your Container Engine’s Syntax

There is a misconception that containers are difficult to work with. The concept is certainly different from other application hosting approaches, but it's pretty straightforward once you learn your container engine's syntax. After all, containers are meant to be more technology-agnostic than other IT tools.

Another pitfall is not understanding whether you need containers or virtual machines for a solution. The correct choice depends on what application issue you're attempting to resolve.

As you delve further into containerization, you'll quickly run across references to Kubernetes, a platform for automating the deployment, scaling and management of containerized applications. Kubernetes (also abbreviated "K8s") organizes groups of containers into pods. Pods share networking configurations and storage resources among containers for simpler management. For example, a pod may contain a container with reference files and a sidecar or supporting container that updates the files. Ambassador containers provide a single interface between the containers in the pod and the outside network that accesses the resources.
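To make the sidecar idea concrete, the snippet below writes a small pod manifest in which one container serves files and a second container refreshes them through a shared volume. It is only a sketch: the pod name reference-files, the container names web and refresher, the volume name shared-files and the 30-second refresh loop are all invented for illustration.

```shell
# Scratch directory for the manifest.
workdir="$(mktemp -d)"

cat > "$workdir/pod.yaml" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: reference-files          # hypothetical pod name
spec:
  volumes:
    - name: shared-files         # storage shared by both containers
      emptyDir: {}
  containers:
    - name: web                  # main container: serves the files
      image: nginx:alpine
      volumeMounts:
        - name: shared-files
          mountPath: /usr/share/nginx/html
          readOnly: true
    - name: refresher            # sidecar: rewrites the file every 30 seconds
      image: busybox
      command: ["sh", "-c", "while true; do date > /files/index.html; sleep 30; done"]
      volumeMounts:
        - name: shared-files
          mountPath: /files
EOF

echo "Pod manifest written to $workdir/pod.yaml"
```

With access to a Kubernetes cluster, kubectl apply -f pod.yaml would ask the cluster to create the pod, and both containers would then be scheduled together on the same node.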

I suggest getting started with Docker or Podman. Work through the tutorial above before attempting more complex solutions. The concepts and practices you learn apply to many common DevOps and infrastructure environments out there. Your career will thank you later!

Learn the skills you need with CompTIA CertMaster Learn. Sign up for a free trial today!